After playing around with QR code corruptions in “Tips on Creating the Smallest Possible QR Codes”, I became curious about generating a few animated variants that are valid on every frame.

The perfect algorithm candidates for this are simple cellular automata, which are models of computation on a grid that repeatedly update the state of each cell based on the arrangement of its neighbors. For instance, a cell might “live” or “die” depending on the state of its nearby cells.
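As a concrete illustration of the "live or die" idea, here is a minimal sketch of one synchronous update of a Life-like automaton. The rule sets `birth` and `survive` default to Conway's Game of Life; they are only an example and are not necessarily the rules used for the animations in this post.

```python
def life_step(grid, birth=(3,), survive=(2, 3)):
    """Return the next generation of a 0/1 grid (cells outside the grid count as dead)."""
    rows, cols = len(grid), len(grid[0])
    new = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Count the live cells among the (up to) eight neighbors.
            n = sum(
                grid[r + dr][c + dc]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
                and 0 <= r + dr < rows
                and 0 <= c + dc < cols
            )
            if grid[r][c] == 1:
                new[r][c] = 1 if n in survive else 0  # live cell survives or dies
            else:
                new[r][c] = 1 if n in birth else 0  # dead cell may be born
    return new
```

Different choices of `birth` and `survive` give very different textures, which is part of what makes these rules fun to experiment with.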

On top of this, I made a few modifications to get more interesting patterns and to make generation easier:

- I ran the automata on a grid nine times finer than the modules of a standard QR code

- I threw in short periods of noisier updates where the automata’s rules were loosened, yielding behavior akin to a Monte Carlo simulated anneal

- I evaluated the automata’s update rules in a random order rather than simultaneously

- In later passes, I allowed updates to be completely rejected if they created an invalid QR code, effectively altering the random sampling order of cell updates
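The random update order and the noisy periods from the list above can be sketched roughly as follows. Everything here is an assumption made for illustration: the names `async_sweep`, `local_majority`, and `noise_for_frame`, the majority rule itself, and the schedule numbers are hypothetical and not taken from the actual implementation.

```python
import random


def local_majority(grid, r, c):
    """Example rule: a cell takes the majority state of its 3x3 neighborhood."""
    rows, cols = len(grid), len(grid[0])
    cells = [
        grid[rr][cc]
        for rr in range(max(0, r - 1), min(rows, r + 2))
        for cc in range(max(0, c - 1), min(cols, c + 2))
    ]
    return 1 if 2 * sum(cells) > len(cells) else 0


def async_sweep(grid, rule, noise=0.0, rng=random):
    """Update every cell once, in place, visiting the cells in a random order.

    With probability `noise` the rule is ignored and the cell is set at
    random, which is one (assumed) way of loosening the rules.
    """
    rows, cols = len(grid), len(grid[0])
    order = [(r, c) for r in range(rows) for c in range(cols)]
    rng.shuffle(order)
    for r, c in order:
        if rng.random() < noise:
            grid[r][c] = rng.randint(0, 1)
        else:
            grid[r][c] = rule(grid, r, c)
    return grid


def noise_for_frame(frame, period=50, burst=5, high=0.2):
    """Hypothetical schedule: a short noisy burst at the start of every period."""
    return high if frame % period < burst else 0.0
```

Because the cells are updated in place, later cells in a sweep already see the new states of earlier ones, which is the main behavioral difference from a simultaneous update.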

Here, we’ll start with a very simple method and then progressively add in enhancements and discuss the issues I ran into along the way.

The simple, fixed centers method

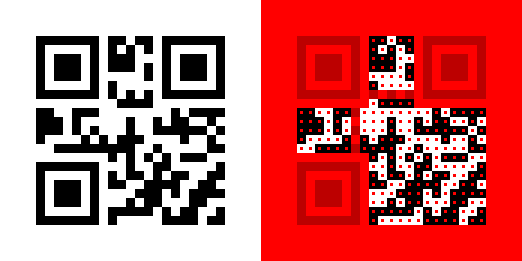

The Python package “segno” offers a somewhat standard method to generate QR codes that incorporate colorful images or GIFs. Their solution is to fix all the position, tracking, and timing modules as well as the center portion of every other module. Such a restriction is shown below.

The idea here is that most QR code readers sample from the estimated center of each module, so that portion is really all that is needed for readability as long as the position and orientation of the code can be accurately determined.
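To make the restriction concrete, here is a rough sketch of how such a mask could be built on the finer grid. It does not use segno's API; `upscale`, `fixed_mask`, `scale`, `core`, and `function_modules` are hypothetical names, and the sizes are placeholders rather than the values used for the figures.

```python
def upscale(modules, scale):
    """Expand each module of a 0/1 matrix into a scale x scale block of pixels."""
    rows, cols = len(modules), len(modules[0])
    return [
        [modules[r // scale][c // scale] for c in range(cols * scale)]
        for r in range(rows * scale)
    ]


def fixed_mask(rows, cols, scale, core, function_modules=frozenset()):
    """Return a pixel mask that is True wherever the automaton may not write.

    A pixel is fixed if it lies in the central core x core block of its
    module, or if its module is listed in `function_modules` (module
    coordinates of patterns that are kept entirely fixed).
    """
    lo = (scale - core) // 2
    hi = lo + core
    mask = []
    for r in range(rows * scale):
        row = []
        for c in range(cols * scale):
            in_core = lo <= r % scale < hi and lo <= c % scale < hi
            whole_module = (r // scale, c // scale) in function_modules
            row.append(in_core or whole_module)
        mask.append(row)
    return mask
```

An automaton would then read every pixel as usual but skip writes wherever the mask is `True`, so the fixed pixels still influence their neighbors.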

The resulting codes are typically valid, although there are cases with complex patterns that might confuse smartphone readers. Despite this, they can generally be scanned with some effort, including the ones below, which are maximally filled with white and black.

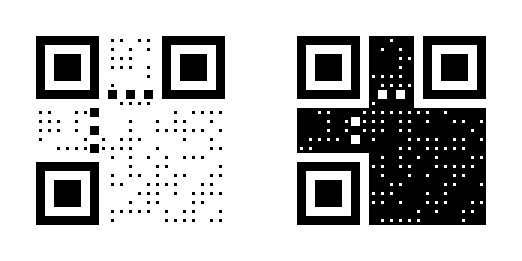

Running a few simple automata under these constraints indeed results in valid QR codes. The patterns are also pretty interesting to look at, especially since the fixed regions influence neighboring pixels through the same update rules as the rest of the grid.

To me, two particular columns stand out: the right-most one, which essentially dithers the QR code, and the second one, which creates smooth, gyrating blobs.

An aside about halting update rules

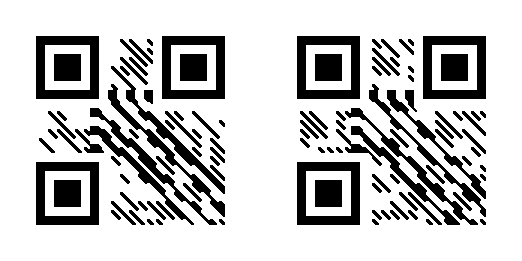

The examples presented here do not reach a final halting state and continue indefinitely. However, you can create compelling patterns using update rules that do lead to a final, static state.

For example, I accidentally stumbled upon one that, in the limit, was equivalent to copying states diagonally down and to the right whenever possible. Despite being mostly uninteresting from an animation perspective, I am quite happy with how these turned out and their relative robustness when scanning.
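The limiting behavior described here can be imitated directly. The sketch below is not the rule I stumbled upon, only the diagonal copy it ends up being equivalent to; `diagonal_copy_until_static` and the `fixed` mask are hypothetical names.

```python
def diagonal_copy_until_static(grid, fixed):
    """Copy each free cell from its upper-left neighbor until nothing changes.

    `fixed[r][c]` is True for pixels that must keep their value.
    The grid is modified in place; the number of sweeps is returned.
    """
    rows, cols = len(grid), len(grid[0])
    sweeps = 0
    changed = True
    while changed:
        changed = False
        for r in range(1, rows):
            for c in range(1, cols):
                if not fixed[r][c] and grid[r][c] != grid[r - 1][c - 1]:
                    grid[r][c] = grid[r - 1][c - 1]
                    changed = True
        sweeps += 1
    return sweeps
```

Once a sweep changes nothing, the pattern is static: every free pixel simply repeats the nearest fixed (or border) pixel up and to the left of it along its diagonal.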

Removing pixel restrictions by explicitly testing for QR code validity

While segno’s strategy is effective, it is also pretty heavy-handed. If you’ve ever seen artistic QR codes in shops or online, it’s apparent that you don’t actually need to keep the tracking modules fixed. Moreover, the built-in error correction should allow us to completely alter some of the modules without compromising readability.

As such, we could simply try testing the validity of the QR code on each modification. If it ever becomes invalid, we’ll just reject the update and try again.
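In code, the reject-and-retry idea might look like the sketch below. The `is_valid` callback is a stand-in for whatever decoder check is used (for example, rendering the grid and confirming that a decoder returns the original payload); it is an assumption of this example and not a call into any particular library.

```python
def try_update(grid, r, c, new_value, is_valid):
    """Apply one pixel change, undoing it if the grid no longer validates.

    Returns True if the change was kept and False if it was rejected.
    """
    old = grid[r][c]
    if old == new_value:
        return True  # nothing changes, so there is nothing to validate
    grid[r][c] = new_value
    if is_valid(grid):
        return True
    grid[r][c] = old  # roll back the rejected update
    return False
```

A rejected pixel is simply left alone until it is visited again, which is why rejection effectively changes the order in which cells end up being updated.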

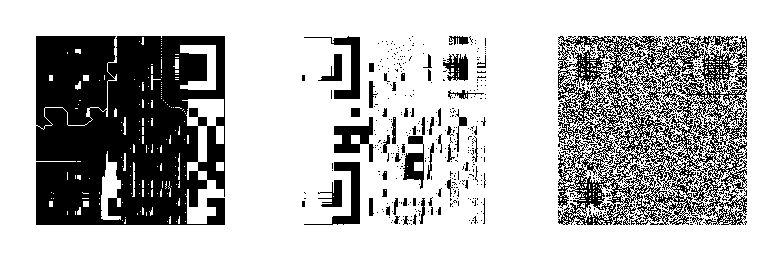

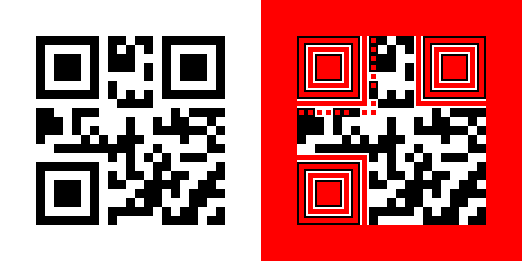

However, if you try this you’ll quickly run into the issue that automated QR code readers are way too lenient!¹ Below, I’ve included three QR codes that are barely recognizable, but still technically valid according to some of the most popular Python decoders.

Ultimately, randomized updates will exploit every little idiosyncrasy of these decoding algorithms, yielding QR codes that are entirely unreadable in real-world situations.

On top of this, smartphone manufacturers like Apple, Google, and Samsung use proprietary and opaque decoding implementations in their devices. It seems extremely difficult in general to determine what they will be able to read and which patterns will confuse them. Their variety of lenses, auto-focus, auto-exposure, and other post-processing techniques only further complicate the matter.

Improving QR code readability with robustness filters

To overcome these challenges, we need to enhance the QR codes to be more robust than strictly necessary.

One effective technique I settled on was ensuring that the QR code remains valid after running a set of image filters. Rank filters that dilate and erode the image proved to be particularly effective for this purpose, a few of which are visualized below.

This intuitively makes sense as it prevents the readers from placing too much emphasis on small regions of the code. Indeed, since we’re reading directly from a lossless, undistorted image, the readers are free to just look at the center-most pixel in each module, which requires far more accuracy than we are likely to get in practice.
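A minimal sketch of this check, using only the two extreme rank filters (minimum and maximum), could look like the following. It assumes that `1` marks a dark pixel and that `is_valid` is a hypothetical decoder callback; the default window size of 5 is a placeholder, and the real filter set may well differ.

```python
def rank_filter(grid, size, pick):
    """Replace each pixel by pick() over its size x size window (clipped at the edges)."""
    rows, cols = len(grid), len(grid[0])
    half = size // 2
    return [
        [
            pick(
                grid[rr][cc]
                for rr in range(max(0, r - half), min(rows, r + half + 1))
                for cc in range(max(0, c - half), min(cols, c + half + 1))
            )
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def dilate(grid, size=5):
    """Maximum filter: grows the regions of 1s."""
    return rank_filter(grid, size, max)


def erode(grid, size=5):
    """Minimum filter: shrinks the regions of 1s."""
    return rank_filter(grid, size, min)


def robustly_valid(grid, is_valid, size=5):
    """Accept a grid only if it decodes as-is and after dilation and erosion."""
    variants = (grid, dilate(grid, size), erode(grid, size))
    return all(is_valid(v) for v in variants)
```

Swapping `robustly_valid` in for a plain validity check makes every accepted update survive both a thickening and a thinning of the dark regions, at the cost of several decoder calls per update.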

I also took the liberty of fixing the inner portions of the format information in the upper left and the tracking modules to prevent them from being completely obliterated by the automata’s random updates. This adjustment significantly improved the overall detection and readability on smartphones.

This looser restriction is visualized below, and you should note how much less of the total QR code is off-limits to the automata compared to the initial method.

Importantly, this technique allows for entire modules to be corrupted, provided that the error correction can restore the original data. Moreover, the tracking modules can be corrupted slightly, which provides a more artistic bent to the overall QR code.

Running the automata now gives us the examples below, and I was able to successfully scan them all with an iPhone, Google Lens, Samsung’s camera, and others.

However, it’s important to note that this method effectively creates a few fixed areas of 5x5 pixels to overcome the filters. This is larger than the fixed regions of the simpler method. Unfortunately, a set of 3x3 filters did not create codes that were robust enough for my liking.

I also explored blurring filters, rotations, and various resizing algorithms, but they didn’t prove as effective as rank filters. Similarly, obscuring regions (i.e., repeatedly cutting out various parts of the image to increase redundancy and prevent dependence on specific modules) did not increase robustness as much as I would have hoped.

Ultimately, I think this could be greatly improved upon, particularly with more insight into common QR code reader implementations and more realistic distortions. That said, I am relatively happy with the result as it stands, especially since I have already spent thousands of compute hours on these experiments.

See also and references

- The earlier post about “Tips on Creating the Smallest Possible QR Codes” and the relevant links therein.

- The Wikipedia articles on cellular automata and simulated annealing.

¹ This is clearly intentional in order to improve their decoding robustness, but it is detrimental for our purposes.